Music can have a powerful effect on our emotions. University of Reading cybernetics professor Slawomir Nasuto and colleagues are developing systems that can monitor activity in the brain and use this information to produce music to change how we feel. They explain more in a new post for The Conversation.

Whether it’s the music that was playing on the radio when you met your partner or the first song your baby daughter smiled to, for many of us, music is a core part of life. And it’s no wonder – there is considerable scientific evidence that foetuses experience sounds while in the womb, meaning music may affect us even before we are born.

Music can leave us with a sense of transcendental beauty or make us reach for the ear plugs. In fact, it is almost unparalleled among the arts in its ability to quickly generate an incredibly wide range of powerful emotions. But what happens in our brains and bodies when we react emotionally to music has long been a bit of a mystery, one that researchers have only recently started to explore and understand.

Building on this growing understanding, we have developed neural technology – a combination of hardware and software systems that interact with the human brain – that can enhance our emotional interaction with music.

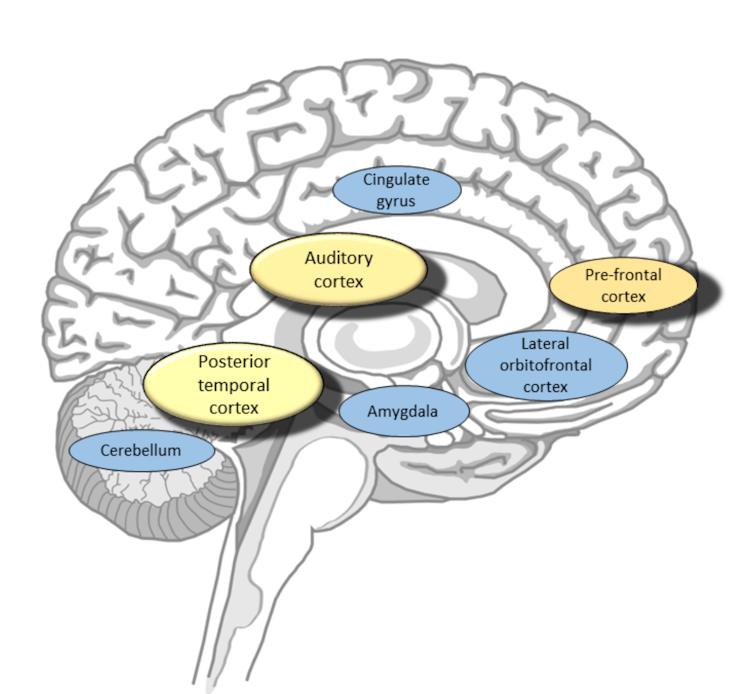

Numerous studies have shown that listening to music leads to changes in activity in core brain networks known to underpin our experience of emotion. These networks include deep brain areas such as the amygdala, cerebellum, and cingulate gyrus. They also include cortical areas on the surface of the brain, such as the auditory cortex, posterior temporal cortex, and prefrontal cortex.

We also know that music has the ability to affect the way in which the body behaves. Our heart rate rises as we listen to exciting music, while our blood pressure can be lowered by calm, soothing music. Scary music, on the other hand, can make us sweat and raise goose bumps.

The way in which the brain responds to music can be measured by modern neural technology. For example, changes in how happy or unhappy we feel are reflected in brain activity within the prefrontal cortex. This can be measured by the electroencephalogram (EEG), which tracks and records brain-wave patterns via sensors placed on the scalp. We can also measure changes in our level of excitement or fear by measuring heart rate.

Neurotechnology of music

Researchers are increasingly experimenting with new ways neural technology can be used to enhance our interactions with art and music. For example, a recent project explored how neural technology can be used to enhance a dance performance by adjusting the staging to the emotions of the dancers.

The possibility of detecting and measuring physiological data that can be correlated with emotional states also opens the door for the development of new technologies for healthcare and well-being. We are interested in developing systems that can monitor activity in the brain and use this information to produce music to change how we feel.

For instance, imagine a device that can detect when you are falling into a state of depression (as evidenced by, for example, unusual spiking activity in the EEG), and use this information to trigger an algorithm that generates bespoke music to make you feel happier. This approach is likely to be effective. Indeed, a recent large meta-analysis of 1,810 music therapy patients has shown that music can reduce depression levels.

We have recently completed a major research project during which we built a proof-of-concept system that does exactly this. We put together a research team comprising neuroscientists, biomedical engineers, musicians, and sound engineers to explore how different types of music change our emotions. We used this knowledge to build a brain-computer music interface, a system that watches for specific patterns of brain activity associated with different emotions. We then developed algorithms to generate music aimed at changing our emotions.

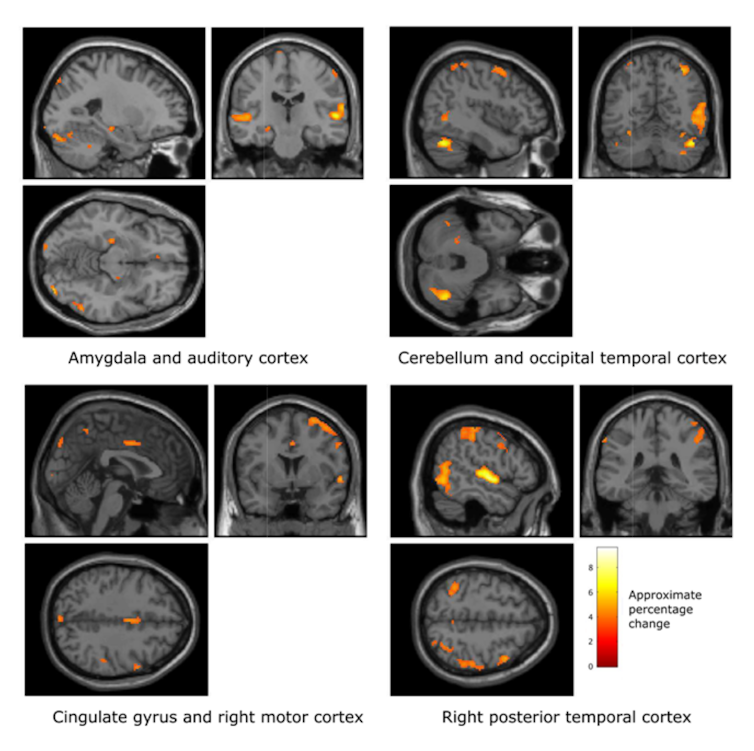

We first explored the neuroscience underpinning how our brains and bodies respond to music. In a series of studies, we investigated how our EEG, heart rate and other physiological processes change as we listen to music that evokes particular emotions. We also used brain scans (fMRI) to explore activity deeper within our brains.

We found a set of key neural and physiological processes to be involved in emotional responses to music. For example, we found additional evidence to support the well-established hemispheric valence hypothesis, which describes how activity differs between the left and right halves of the brain as we experience emotion – with the left hemisphere being more active during positive emotional experiences.
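As a rough illustration of what comparing the two hemispheres can look like in practice, the sketch below computes a simple left-versus-right power index from two signals. It is not the analysis used in our studies: the function names, the synthetic signals and the choice of a log-ratio of mean power are all assumptions made for the example, and how such an index maps onto "activity" depends on which frequency band is analysed.

```python
import math

def mean_power(samples):
    """Mean squared amplitude of a signal segment."""
    if not samples:
        raise ValueError("samples must not be empty")
    return sum(x * x for x in samples) / len(samples)

def asymmetry_index(left, right):
    """ln(left power) - ln(right power).

    Positive values mean more signal power on the left channel.
    Hypothetical helper for illustration only.
    """
    return math.log(mean_power(left)) - math.log(mean_power(right))

# Synthetic one-second signals at an assumed 256 Hz sampling rate:
# a 10 Hz sine wave, twice as large on the left as on the right.
left = [2.0 * math.sin(2 * math.pi * 10 * t / 256) for t in range(256)]
right = [1.0 * math.sin(2 * math.pi * 10 * t / 256) for t in range(256)]

index = asymmetry_index(left, right)
print(f"asymmetry index: {index:.3f}")
```

A real recording would first need artefact removal and band-pass filtering, and a silent (all-zero) channel would make the logarithm fail, so this is only a starting point.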

We also found evidence that cortical activity captured in the EEG reflects changes in activity in inner brain regions, such as the amygdala. This suggests that the EEG may, in future, be used to estimate levels of activity in some sub-cortical brain regions.

This work has also taught us about how music affects our emotions. For example, we identified how the tempo of music increases not only the level of excitement felt by a listener but also the amount of pleasure experienced, with higher tempo leading to more pleasure.

We then went on to develop a music generation system capable of producing a wide range of emotions in the listener. This algorithmic composition system is able to compose music in real time to target specific emotions. Our resulting brain-computer music interface is the world’s first demonstration of this technology and has been tested in healthy participants and, in a small case study, with one individual with Huntington’s disease, with good levels of success.
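The feedback idea behind such an interface can be sketched in a few lines. The example below is a toy illustration rather than our composition algorithm: it assumes a hypothetical emotion estimate on a scale from -1 (unhappy) to 1 (happy), and it nudges a single musical parameter, tempo, upwards when the estimate falls short of a target, following the observation that higher tempo was linked to more pleasure. The function name, the gain and the tempo limits are all invented for the example.

```python
def next_tempo(current_bpm, estimated_valence, target_valence,
               gain=20.0, min_bpm=60.0, max_bpm=180.0):
    """Proportional update of tempo from an emotion estimate.

    Raises the tempo when the estimated valence is below the target and
    lowers it when above, then clamps the result to a playable range.
    All parameters are hypothetical values chosen for illustration.
    """
    error = target_valence - estimated_valence
    proposed = current_bpm + gain * error
    return max(min_bpm, min(max_bpm, proposed))

# Simulated sequence of valence estimates as the listener's mood improves.
tempo = 90.0
for valence in [-0.5, -0.2, 0.1, 0.4]:
    tempo = next_tempo(tempo, valence, target_valence=0.5)
    print(f"estimated valence {valence:+.1f} -> tempo {tempo:.1f} bpm")
```

A working system would adjust many more musical features than tempo and would need a reliable emotion estimate from the EEG and other signals, which is the hard part of the problem.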

This system has numerous potential applications, including music therapy and music education. It could also aid the development of novel artistic or therapeutic systems.

Our system may be a first step towards new brain-driven music therapies, by enabling a better understanding of the relationship between our emotions and what happens in our brain as we listen to music. It also represents one of the first attempts at a new form of neural technology that is able to interface directly between our brain and music.

This post, by Dr Ian Daly from the University of Essex, Eduardo R Miranda, Professor in Computer Music at Plymouth University and Slawomir Nasuto, Professor of Cybernetics at the University of Reading, first appeared on The Conversation, 11 July 2019.

Professor Slawomir Nasuto is Deputy Research Division Lead of the Biomedical Sciences and Biomedical Engineering Division at the University of Reading. His research interests include computational neuroscience and neuroanatomy, the analysis of signals generated by the nervous system (single neuron and multivariate spike trains, or EMG), and their applications for Brain Computer Interfaces and Animats (robots controlled by neural cultures).