by Jon Weinbren, University of Surrey

[Obraz is the anglicised spelling of the Russian polyseme образ, which can be roughly translated as character, image, representation, likeness, or similitude.]

This is a whirlwind tour of a piece of research I had the pleasure of presenting at the Digital Humanities and Artificial Intelligence Conference at the University of Reading in June 2024. In this work, I draw comparisons between the now widely familiar world of generative AI image creation and ‘Active Analysis,’ a significant but underappreciated aspect of Konstantin Stanislavsky’s actor training system.

One of the challenges in delivering this paper was gauging the audience’s familiarity with both fields: the historical and methodological context of Stanislavsky’s system of actor training, and the intricate mechanics of diffusion-based generative AI. The room was full of very smart people with extensive experience in and knowledge of ‘Digital Humanities’ (a term whose precise meaning still slightly eludes me), but I was uncertain about how much explanation and contextualisation would be needed for the two areas I was drawing on.

My research had developed through my discussions with the ‘Stanislavsky Studies’ community, where I was accustomed to assuming in-depth knowledge of this seminal Russian actor, director, trainer, and theatre impresario, whose work has shaped Western theatre and cinema acting practices for over a century. The ‘Stanislavskians’ I had engaged with were experts in the field, but I couldn’t make the same assumption about the audience in Reading. I therefore adjusted my presentation to include key information about Stanislavsky, his ‘system,’ and his significant legacy.

Conversely, when presenting to Machine Learning specialists at the University of Surrey and elsewhere, I could freely delve into the technicalities of Denoising Diffusion Probabilistic Models and the complexities of latent spaces and transformer architectures. However, for that particular audience to gain value from my presentation, I was compelled to provide something of a beginner’s guide to acting, emotion, and Stanislavsky’s approach to stagecraft and performative ‘realism’. And that was before I even broached the more advanced topic of ‘Active Analysis’, which was revolutionary even in Stanislavsky’s own iconoclastic contexts.

So, with this meta-analysis concluded, here is a brief and necessarily condensed outline of the paper, presented in a format that I hope will be appropriate and digestible for this medium.

Exploring Digital Acting: Bridging Stanislavsky’s Legacy with AI-Assisted Image Creation

As part of my ongoing research into digital acting, I’ve been fascinated by the unexpected connections between the time-honoured techniques of theatre and the cutting-edge capabilities of generative artificial intelligence (AI). In particular, I’ve been exploring how Konstantin Stanislavsky’s Active Analysis — a lesser-known yet ingenious aspect of his ‘system’ — shares surprising similarities with the way modern AI systems, like text-to-image generators, create visual content.

So, who was Stanislavsky, and what does his work have to do with AI?

Rediscovering Stanislavsky

Konstantin Stanislavsky (1863–1938) was a Russian theatre director and actor, best known for co-founding the Moscow Art Theatre. He developed what’s now known as the ‘Stanislavsky System,’ a comprehensive approach to acting that emphasised psychological realism and emotional truth in performance. A particular component of this system is Active Analysis, a rehearsal technique that’s all about improvisation and iterative refinement. Despite its profound impact, this technique isn’t as widely discussed as other elements of his work.

In simple terms, Active Analysis encourages actors to explore their characters through spontaneous enactments rather than just memorising lines. This method helps actors internalise their roles, allowing for performances that feel authentic and dynamic. It’s about asking, ‘What would I do if I were this character?’ — a question that leads to a performance that feels lived-in and genuine.

Stanislavsky’s approach to acting was revolutionary because it moved away from the rigid, declamatory style that was common in his time. Instead of focusing solely on the external presentation of a character, Stanislavsky urged actors to delve into their own emotions and experiences, using these as tools to bring their characters to life. This technique became the foundation for many acting schools worldwide, although it is important to note that Stanislavsky’s ‘system’ is often misinterpreted and oversimplified in the American adaptation developed by Lee Strasberg and known as Method Acting.

While Strasberg’s Method Acting emphasises emotional memory and personal experience as the primary tools for an actor, Stanislavsky’s system was broader and more flexible, incorporating a variety of techniques to help actors create believable characters. His work is more focused on the actor’s active engagement with the text, the given circumstances, and the dynamics between characters, rather than solely on the actor’s personal emotions.

The Ingenuity of Active Analysis

Active Analysis represents one of Stanislavsky’s most innovative contributions to rehearsal techniques, yet it remains relatively uncelebrated in discussions about his work. This method emerged as a practical solution to help actors immerse themselves in their roles more deeply. By encouraging actors to improvise around the script’s ‘given circumstances’ and iteratively refine their performances, Stanislavsky was able to foster a deeper connection between the actor and the character, leading to more authentic portrayals on stage.

What makes Active Analysis particularly ingenious is its emphasis on the iterative process. Rather than rehearsing scenes in a fixed, linear way, actors were invited to explore different possibilities, to ‘play’ within the role, and to allow their performances to evolve organically over time. This technique not only made performances more dynamic but also helped actors discover new facets of their characters that they might not have found through more traditional methods.

But how does this century-old method relate to artificial intelligence, you ask?

The AI Connection

As someone deeply interested in ‘digital acting’ (that is, giving autonomously animated characters their own acting capabilities), I find all things AI very much in the frame. This is one of the many reasons why I have been looking closely at generative AI systems and how they could be used in this context. My goal is to explore how AI can enhance or even replicate the nuanced performances typically associated with human actors.

It was during these investigations that some uncanny resonances emerged between Stanislavsky’s Active Analysis and the processes involved in generative AI text-to-image systems. Tools like DALL-E, Midjourney, Flux and Leonardo.ai, all of which generate images based on text descriptions, rely on a fascinating process called diffusion.

Here’s how it works in a nutshell:

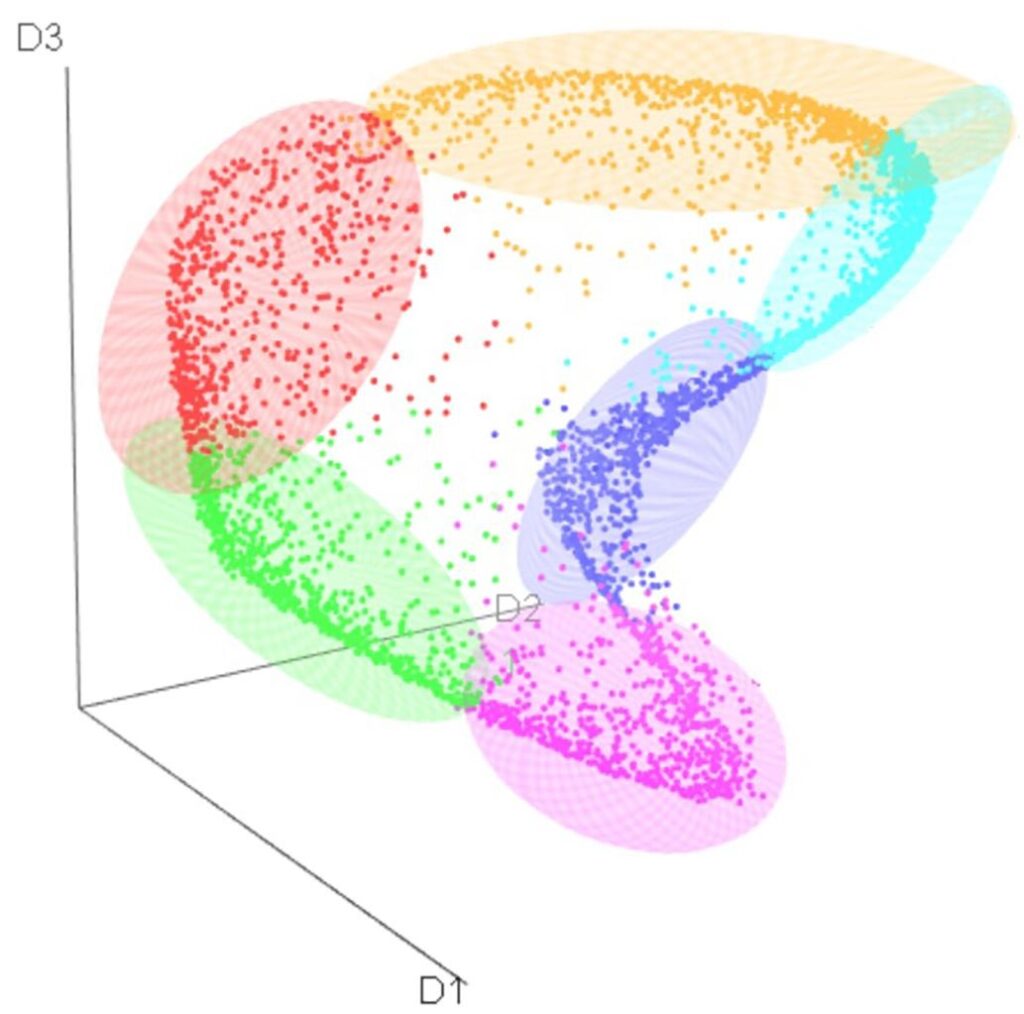

- Text Encoding and Latent Space: When the AI reads a text prompt, it first converts the prompt into a numerical representation known as an embedding. This embedding is then placed into what’s called Latent Space, a high-dimensional mathematical space where each dimension represents different features or attributes of the data. In Latent Space, concepts that are similar or related are positioned closer together, while those that are different are further apart. For example, if the prompt is ‘a cat riding a bicycle,’ the AI maps this concept into Latent Space where it can capture the essence of both ‘cat’ and ‘bicycle’ and their relationship.

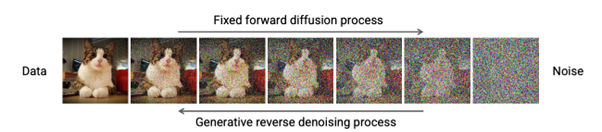

- Starting with Noise: The image creation process begins with a random, noisy image — think of it like static on an old TV screen. This noise is also represented in Latent Space, but it initially occupies a position far from the structured concept encoded by the text.

- Denoising Process: The AI then begins the process of denoising, where it gradually refines the noisy image by adjusting its position in Latent Space. Each adjustment is guided by the text prompt’s embedding, moving the image closer to the desired outcome. As the image is refined step by step, the AI aligns it with the features and attributes described in the text prompt, until a coherent and recognisable image emerges.

Figure 3 – Denoising and Reverse Denoising

- LoRAs and Direction: Just as a director might guide an actor’s interpretation of a role, LoRAs (Low-Rank Adaptations, a lightweight fine-tuning technique first proposed for large language models and now widely applied to image generators) can be used to modify and fine-tune the AI’s output to match specific styles or artistic directions. This is akin to how a director shapes the performance to align with a particular vision or style, ensuring that the final output, whether a performance or an image, reflects a cohesive and deliberate creative approach.
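For readers who think in code, the encode, start-from-noise, and denoise steps above can be caricatured in a few lines of Python. This is only an illustrative toy and not how any real image generator is implemented: the four-number `prompt` vector, the `toy_denoise` function, and its `step_size` parameter are all invented for this sketch, and where a real diffusion model uses a trained neural network to predict the noise to remove, the toy simply nudges the point towards a known target.

```python
import math
import random


def toy_denoise(prompt_embedding, steps=50, step_size=0.1, seed=0):
    """Move a random starting point towards a target vector in small steps."""
    rng = random.Random(seed)
    # Start from pure Gaussian noise: the "static on an old TV screen".
    latent = [rng.gauss(0.0, 1.0) for _ in prompt_embedding]
    distances = []
    for _ in range(steps):
        # ASSUMPTION: a real model predicts the noise with a trained network.
        # Here we cheat and step directly towards the known target.
        latent = [x + step_size * (t - x) for x, t in zip(latent, prompt_embedding)]
        distances.append(math.dist(latent, prompt_embedding))
    return latent, distances


# Hypothetical embedding standing in for "a cat riding a bicycle".
prompt = [0.9, -0.3, 0.5, 0.1]
final, distances = toy_denoise(prompt)
print(f"distance to prompt after first step: {distances[0]:.3f}")
print(f"distance to prompt after last step:  {distances[-1]:.3f}")
```

The point of the sketch is the shape of the process rather than the arithmetic: no single step produces the result, but every step leaves the point a little closer to what the prompt describes.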

Latent Space is a critical concept here because it allows the AI to navigate through a vast landscape of possible images, making informed decisions about how to transform noise into a meaningful visual representation based on the text.
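The idea that related concepts sit closer together can be made concrete with a small similarity calculation. The sketch below uses cosine similarity, one common way of comparing embeddings; the three-dimensional vectors are made up for illustration (real embeddings have hundreds or thousands of dimensions and are learned from data, not written by hand).

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, invented purely for this example.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "bicycle": [0.1, 0.2, 0.95],
}

cat_kitten = cosine_similarity(embeddings["cat"], embeddings["kitten"])
cat_bicycle = cosine_similarity(embeddings["cat"], embeddings["bicycle"])
print(f"cat vs kitten:  {cat_kitten:.2f}")
print(f"cat vs bicycle: {cat_bicycle:.2f}")
```

With these invented numbers, ‘cat’ and ‘kitten’ score far higher than ‘cat’ and ‘bicycle’, which is the sense in which similar concepts are ‘near’ one another.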

Equally important are LoRAs, which steer the AI’s outputs towards specific styles or artistic directions, much like a director guiding an actor’s performance to achieve a desired creative vision. The iterative refinement as a whole, in which an image slowly emerges from a chaotic mess of pixels, is strikingly similar to how actors, through Active Analysis, refine their performances from initial improvisations into something coherent and powerful.
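For the technically curious, the ‘low-rank’ in LoRA refers to a simple trick: rather than retraining a model’s large weight matrices, a LoRA leaves them frozen and learns a small correction built from two thin matrices. The sketch below shows only that arithmetic, with tiny hand-picked numbers; the names `W`, `A`, `B`, `d` and `r` follow common notation but the values are assumptions for illustration, and nothing here is taken from any particular tool.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Add two matrices of the same shape element by element."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


d, r = 4, 1  # layer width and LoRA rank (real models use d in the thousands)

# Frozen base weights: an identity matrix stands in for a trained layer.
W = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]

# The LoRA itself: two thin matrices, illustrative values only.
B = [[0.5], [0.0], [-0.5], [0.0]]  # shape d x r
A = [[1.0, 0.0, 0.0, 1.0]]         # shape r x d

W_adapted = add(W, matmul(B, A))   # base behaviour plus a small "direction"

full_params = d * d      # numbers needed to retrain the whole layer
lora_params = 2 * d * r  # numbers stored in the LoRA
print(f"full layer: {full_params} values, LoRA: {lora_params} values")
```

Because the base weights are untouched, a LoRA can be swapped in or out like a note from the director: the underlying ‘actor’ stays the same, and only the steer changes.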

Why This Matters to Digital Acting

You might be wondering why someone who studies digital acting is interested in these parallels between AI and theatre. It’s all about understanding the deeper principles of creativity and performance, whether they’re being carried out by humans or machines.

Both Stanislavsky’s Active Analysis and AI diffusion models rely on iterative refinement. Actors don’t just get it right on the first try. They rehearse, they explore different possibilities, and they adjust their performances until everything clicks. Similarly, AI doesn’t generate a perfect image right away — it gradually shapes the noise into something recognisable, guided by the input text.

In both cases, the process is guided by experience and data. For actors, this means drawing on their personal experiences and emotional responses. For AI, it means relying on vast amounts of training data — millions of image-caption pairs that teach it how to associate words with visuals.

But beyond these technical similarities, what really excites me is the idea that both actors and AI systems are, in a sense, engaging in a form of ‘creative improvisation.’ For actors, this improvisation is rooted in the moment-by-moment decisions they make during rehearsal — decisions that are influenced by their understanding of the character, the context of the scene, and their interactions with other actors. For AI, the improvisation comes from the way it navigates the vast, complex space of possible images, guided by the constraints of the text prompt.

This connection between human and machine creativity opens up exciting possibilities for digital acting. Imagine an AI system that could generate not just images, but entire performances — performances that could then be interpreted and refined by human actors. Or consider how AI-generated imagery could be used to inspire new approaches to character development, set design, or even the writing of scripts.

Expanding the Horizon of Creativity

The intersection of Stanislavsky’s Active Analysis and AI diffusion models also prompts us to rethink what we mean by ‘creativity.’ Traditionally, creativity has been seen as a uniquely human trait: a product of consciousness, emotion, and personal experience. However, the success of AI systems in generating art, music, and now even performances challenges this notion.

Does this mean that machines can be truly creative? That’s still a matter of debate. But what’s clear is that AI can engage in processes that are remarkably similar to human creativity. By iteratively refining their outputs, guided by data and algorithms, AI systems can produce results that are both novel and meaningful — qualities that are central to our understanding of creativity.

In the context of digital acting, this suggests a future where human and machine creativity are not in competition, but in collaboration. By blending the strengths of both, we could develop new forms of artistic expression that go beyond what either could achieve on their own.

For example, AI could be used to generate a range of possible interpretations of a character, which an actor could then explore and adapt in rehearsal. Or AI-generated environments could provide dynamic backdrops that respond in real-time to the actions of performers, creating a more immersive and interactive theatre experience.

Looking Ahead

As AI continues to advance, the boundaries between machine-generated and human-created art will blur. By exploring the resonances between traditional artistic practices and AI, we can develop new methods of collaboration that benefit both fields. Whether through digital avatars that ‘perform’ with the nuance of a trained actor or AI systems that generate concept art, the potential for synergy is vast.

In conclusion, while Stanislavsky could not have imagined the technological tools we have today, his emphasis on the iterative, experiential nature of creativity resonates deeply with the processes driving AI innovation. As we continue to explore these intersections, we may find that the future of creativity lies not in choosing between human or machine, but in the harmonious integration of both.

For those involved in ‘Digital Humanities’, this is an exciting frontier. We’re blending the traditional with the technological, exploring new ways to create and perform. Whether you’re a theatre enthusiast or a tech aficionado, there’s something truly fascinating about watching these worlds come together.