RACC2 – Login and Interactive Computing

Logging in to the RACC2

You can log into the Reading Academic Computing Cluster (RACC2) with the following command:

ssh -X USERNAME@racc.rdg.ac.uk

The ‘-X’ option enables X11 forwarding, which lets you run applications with graphical user interfaces (GUIs), such as text editors or the graphics displays of data visualisation tools. See Access to ACT Servers via an SSH Client for more information and important security-related recommendations.
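Once you are logged in, a quick way to see whether X11 forwarding was set up is to look at the DISPLAY environment variable, which the SSH server sets when forwarding is granted. The following is a minimal sketch; `x11_status` is a hypothetical helper name used for illustration, not a command provided on the RACC2.

```shell
# Run on the login node after connecting with "ssh -X".
# x11_status is a hypothetical helper, not part of the RACC2 setup.
x11_status() {
    if [ -n "${DISPLAY:-}" ]; then
        # sshd sets DISPLAY when X11 forwarding has been granted
        echo "X11 forwarding active (DISPLAY=$DISPLAY)"
    else
        echo "X11 forwarding not active: reconnect with ssh -X"
    fi
}

x11_status
```

If the check reports that forwarding is not active, GUI applications will fail to open a window until you reconnect with the ‘-X’ option and a working X server on your own machine.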

To connect from a Windows computer you will need an SSH client such as MobaXterm. Note that there is a portable edition that does not require administrator rights to install. To connect from a Mac you will need to install XQuartz before X11 forwarding will work with the native Terminal app. If you are using Linux, including our Linux Remote Desktop Service, SSH and X11 forwarding should work without any additional configuration. To access the RACC2 from our Linux Desktop Service, you can follow the steps in the user guide article.

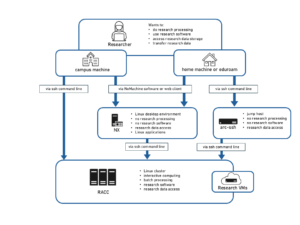

If you need to connect from an off-campus machine, the available options are described here.

Interactive computing on the login nodes

The above ssh command will put you on one of the RACC2’s login nodes. There’s more detailed information about the layout of the cluster in the RACC introduction article, but in summary, the login nodes are your direct access points to the RACC2, whereas the batch nodes run your jobs without your direct interaction.

You do not choose which login node you connect to. The address racc.rdg.ac.uk acts as a load balancer and connects you to the least loaded login node. Both CPU load and memory use are considered in the load balancing process. Currently we have 5 login nodes, each with multicore CPUs and 386 GB of memory. They are suitable for low-intensity interactive computing, including short test runs of research code and interactive work with applications like Matlab, IDL, etc.

Please remember the fair usage policy on the RACC2: the login nodes are shared among all users, so please do not oversubscribe the resources. Small jobs as outlined above are OK, but bigger jobs should be run in batch mode.

Login node sessions are limited to 12 CPU cores and 256 GB of memory per user. The CPU limit is enforced in such a way that all your processes on the node share just 12 cores. If you run code that uses more than 12 processes or threads for numerical processing, the extra processes or threads compete for the same 12 cores, so the code runs less efficiently and more slowly than it would with just 12. If your processes exceed the memory limit, one of them will be killed.
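For OpenMP-based code, one way to stay within the 12-core limit is to cap the thread count before starting a test run. The sketch below uses the standard OMP_NUM_THREADS environment variable; `cap_threads` and `LOGIN_CORE_LIMIT` are hypothetical names chosen for illustration, and other parallel frameworks may need their own settings.

```shell
# Per-user core limit on the login nodes, as stated above.
LOGIN_CORE_LIMIT=12

# cap_threads is a hypothetical helper: it clamps the requested thread
# count to the limit and exports it for OpenMP programs.
cap_threads() {
    requested=${1:-$LOGIN_CORE_LIMIT}
    if [ "$requested" -gt "$LOGIN_CORE_LIMIT" ]; then
        requested=$LOGIN_CORE_LIMIT
    fi
    export OMP_NUM_THREADS="$requested"
    echo "$requested"
}

cap_threads 32   # prints 12
cap_threads 4    # prints 4
```

Call the function directly in your shell, rather than inside `$(...)`, so that the exported variable is visible to the programs you start afterwards.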

If you notice that the login node becomes slow, you can try disconnecting and connecting to another node via the above ssh command, which selects the least used node by load balancing.
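Before reconnecting, it can help to confirm which node you are on and how busy it is. This is a minimal sketch using standard Linux tools; `node_load` is a hypothetical helper name, and the load averages it prints cover all users on the node, not just your own processes.

```shell
# node_load is a hypothetical helper, not a RACC2 command.
node_load() {
    # Name of the login node the load balancer placed you on
    echo "node: $(uname -n)"
    if [ -r /proc/loadavg ]; then
        # 1-, 5- and 15-minute load averages
        echo "load: $(cut -d' ' -f1-3 /proc/loadavg)"
    else
        # Fallback for systems without /proc/loadavg
        echo "load: $(uptime)"
    fi
}

node_load
```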

Background jobs and cron jobs are not permitted on the login nodes. If you need to run a cron job, please use our cron job node racc-cron on the old RACC.

Storage

Unix home directories have limited data storage, but can be used for small scale research data processing. More storage space for research data can be purchased from the Research Data Storage Service.

The storage volumes are mounted under /storage./research.

100 TB of free scratch space is provided at /scratch2 and /scratch3. Please note that data on the scratch storage is not protected and may be removed periodically to free up space (periodic removal is not currently implemented). Scratch storage should only be used for data that you have another copy of, or that can be easily reproduced (e.g. model output or downloads of large data sets). User directories can be requested by raising a ticket to DTS.
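Because scratch directories are created on request, a script that writes to scratch can first check that the directory exists and is writable. The sketch below is illustrative only: `check_scratch` is a hypothetical helper, and the default path /scratch2/USERNAME is an assumption about the layout, so substitute the directory DTS actually creates for you.

```shell
# check_scratch is a hypothetical helper; it returns non-zero when the
# directory is missing or not writable.
check_scratch() {
    dir=$1
    if [ -d "$dir" ] && [ -w "$dir" ]; then
        echo "ok: $dir is writable"
    else
        echo "missing: $dir (request a user directory from DTS)"
        return 1
    fi
}

# ASSUMPTION: the per-user path below is a placeholder, not a documented layout.
check_scratch "${SCRATCH_DIR:-/scratch2/${USER:-USERNAME}}" || true
```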

Software installed on the RACC

Locally installed software is managed with environment modules; see our article on how to access software on the cluster for more information. The modules available on the RACC2 are not the same as those on the legacy RACC.
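As a rough illustration, the modules available on a node can be listed with the standard `module avail` command. The wrapper below, `list_modules`, is a hypothetical helper that only adds a guard for shells where the `module` command is not defined, such as your own computer.

```shell
# list_modules is a hypothetical helper around the standard "module avail".
list_modules() {
    if command -v module >/dev/null 2>&1; then
        # Some versions print the listing to stderr, so merge the streams
        module avail 2>&1
    else
        echo "module command not found: run this on a RACC2 login node"
    fi
}

list_modules
```

A module from the listing can then be loaded with `module load` followed by its name, and `module list` shows what is currently loaded; the names themselves are specific to the RACC2 and are covered in the article mentioned above.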

Some scientific software packages are installed on the system from RPMs and do not require loading a module. They can be called from the command line as soon as you log in, without any extra setup.

You can find further useful, software specific information in these articles: Python on the Academic Computing Cluster and Running Matlab Scripts as Batch Jobs.