RACC2 announcement – The new Reading Academic Computing Cluster

Introduction to the new cluster

The new Reading Academic Computing Cluster (RACC2) is now available for use. Users are encouraged to log in and make use of the new facilities. Interactive computing and batch jobs work in the same way as on the RACC, so experienced users should find migrating to RACC2 straightforward.

The RACC2 documentation is still in progress. Until updated manuals are ready, please refer to the RACC documentation. If software or libraries are missing, or if you notice problems with interactive computing or batch jobs, please let us know by raising a ticket with DTS.

Logging in to RACC2

You can log in to RACC2 with the following command:

ssh -X USERNAME@racc.rdg.ac.uk

The particulars of logging in to the new cluster are the same as for the original RACC.

The ‘-X’ option enables X11 forwarding, which lets you run applications with graphical user interfaces (GUIs), such as text editors or the graphics displays in data visualisation tools. See Access to ACT Servers via an SSH Client for more information and important security-related recommendations.
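Once logged in, one quick way to see whether X11 forwarding appears to be active is to check that the DISPLAY environment variable is set in the remote shell. The sketch below is illustrative only: the function name x11_status is hypothetical and is not part of any RACC2 tooling.

```shell
# Report whether X11 forwarding appears to be active in this session.
# With `ssh -X`, the SSH server normally sets DISPLAY (e.g. localhost:10.0).
x11_status() {
    if [ -n "${DISPLAY:-}" ]; then
        echo "X11 forwarding active: DISPLAY=${DISPLAY}"
    else
        echo "DISPLAY is not set: reconnect with 'ssh -X'"
        return 1
    fi
}

# Run the check; `|| true` keeps a negative result from aborting a script.
x11_status || true
```

A set DISPLAY is only an indication. If GUI applications still fail to open, the client-side requirements for your operating system are the next thing to check.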

To connect from a Windows computer you will need an SSH client such as MobaXterm. Note that there is a portable edition that does not require administrator rights to install. To connect from a Mac you will need to install XQuartz before X11 forwarding will work with the native Terminal app. If you are using Linux, including our Linux Remote Desktop Service, SSH and X11 forwarding should work without any additional configuration.

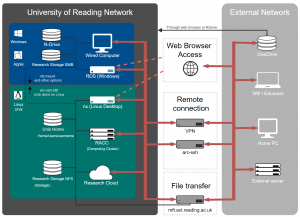

If you need to connect from an off-campus machine, the available options are described here.

Interactive computing and batch jobs

Interactive computing and batch jobs work the same way on both clusters. For more information, refer to the RACC user guides on interactive computing and batch jobs. It might be that there are some

Background jobs and cron jobs are not permitted on the login nodes. If you need to run a cron job, please use our cron job node, racc-cron.
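For orientation, a minimal batch script might look like the sketch below. It assumes the Slurm scheduler, which this article does not name, so treat the #SBATCH directives as assumptions and check the RACC user guides for the partitions and limits that actually apply. The job name, time limit and output file name are placeholders.

```shell
#!/bin/bash
# ASSUMPTION: the scheduler is Slurm; these directives are read by sbatch.
#SBATCH --job-name=example_job     # placeholder job name
#SBATCH --ntasks=1                 # a single task
#SBATCH --cpus-per-task=1          # one CPU core for that task
#SBATCH --time=00:10:00            # wall-clock limit (hh:mm:ss)
#SBATCH --output=example_%j.out    # %j expands to the job ID

# Slurm sets SLURM_JOB_ID inside a job; fall back to "none" when run by hand.
job_id="${SLURM_JOB_ID:-none}"
echo "Job ${job_id} running on $(uname -n)"
```

Under that assumption, the script would be submitted from a login node with `sbatch example_job.sh` (a hypothetical file name) rather than being started in the background.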

Storage

RACC2 sees the same storage as the RACC. This includes user home directories, Research Data Storage and scratch storage.

The Research Data Storage volumes are mounted under /storage, and scratch storage volumes are provided under /scratch2 and /scratch3.

Please note that data in scratch space is not protected and might be removed periodically to free up space (periodic removals are not currently implemented). Scratch storage should only be used for data which you have another copy of or which can be easily reproduced (e.g. model output or downloads of large data sets). User directories are created on request, when the use of scratch rather than another type of data storage is justified.
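Because scratch is unprotected, it can help to copy anything that is hard to reproduce to protected storage once a job has finished. The following is a minimal sketch: the function name keep_results and the example paths are hypothetical, and the real directory layout under /storage and the scratch volumes depends on your project.

```shell
# Copy the contents of a scratch directory to a protected location.
# Usage: keep_results SRC_DIR DEST_DIR
keep_results() {
    src="$1"
    dest="$2"
    if [ ! -d "$src" ]; then
        echo "source directory not found: $src" >&2
        return 1
    fi
    mkdir -p "$dest" || return 1
    # -R copies recursively, -p preserves timestamps and permissions.
    cp -Rp "$src/." "$dest/"
}

# Hypothetical example paths; replace with your own directories:
# keep_results /scratch3/USERNAME/run01 /storage/PROJECT/run01
```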

Software installed on RACC2

Software on RACC2 is also managed with environment modules; see our article on how to access software on the cluster for more information. The modules available on RACC2 are not the same as those available on the RACC.

If software that was previously available on the RACC is missing from the modules on RACC2, check whether it is available directly from the command line. RACC2 provides a broader range of software packages and libraries from the command line, without loading modules. If you find that anything is missing, that we should be providing some software centrally, or that something isn’t working as expected, please let us know by raising a ticket with DTS.
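One way to check whether a program is already usable without loading a module is the standard shell built-in `command -v`. The sketch below wraps it in a hypothetical helper, check_tool; the program names in the loop are placeholders, not a statement of what is installed on RACC2.

```shell
# Report whether a program is already on PATH, i.e. usable without a module.
# Usage: check_tool NAME
check_tool() {
    if path=$(command -v "$1" 2>/dev/null); then
        echo "$1: available as $path"
    else
        echo "$1: not found on PATH"
        return 1
    fi
}

# Example: check a few programs (names are placeholders).
for tool in python3 gcc; do
    check_tool "$tool" || true
done
```

If a program is not found this way, `module avail` lists the modules that can be loaded.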

You can find further useful, software-specific information in these articles: Python on the Academic Computing Cluster and Running Matlab Scripts as Batch Jobs.