openIFS@Reading

Introduction

The ECMWF OpenIFS programme provides an easy-to-use, exportable version of the Integrated Forecasting System (IFS) used at ECMWF for operational weather forecasting. For more information about the model itself, please visit the ECMWF OpenIFS website.

If you want to start using OpenIFS on the Reading Academic Computing Cluster (RACC), please follow the instructions below. You don't need to install it yourself from the ECMWF source code; a pre-configured version is already available on the RACC.

Join our OpenIFS mailing list to receive updates on OpenIFS@Reading progress and to post to the OpenIFS users group.

1. Obtaining and compiling the OpenIFS on the Reading Academic Computing Cluster (RACC) (Version 40r1)

Please note that the instructions in this section and the next are for downloading OpenIFS version 40r1, which is still used for teaching. If you wish to use the newer 43r3 release, amend the instructions as described in section 3.

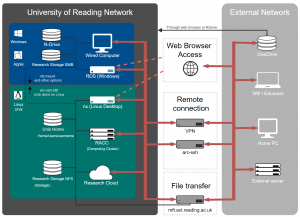

Once you're logged in to the RACC (see here for how to do this), you'll need a few scripts to acquire, compile and run OpenIFS. You can copy them from the following location:

/storage/silver/metdata/models/openIFS/oifs_repo

The 3 scripts in the above directory are:

get_oifs.sh – This downloads the OpenIFS model code and a sample experiment setup.

setup_oifs – This sets up your OpenIFS model environment.

compile_oifs.sh – This compiles your model code.

These scripts need to be run in succession. Make sure you are not in your home directory when you run them. An OpenIFS model run needs quite a lot of space, and the storage for home directories is not designed to hold the input to or output from jobs running on the cluster; this kind of usage can cause problems when it fills up your home directory.
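The copy step and the advice about home directories can be combined in a small helper. This is a minimal sketch, not something provided on the RACC: the function name prepare_oifs_workdir and the destination path in the example are hypothetical, and it assumes the three script names listed above. It refuses to copy into any directory inside $HOME.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not provided on the RACC): copy the three OpenIFS
# scripts into an existing working directory, refusing anything inside $HOME.
prepare_oifs_workdir() {
  local src=$1 dest=$2
  local home_real dest_real

  if [ ! -d "$dest" ]; then
    echo "prepare_oifs_workdir: $dest does not exist; create it first" >&2
    return 1
  fi

  # Compare physical paths so that a symlink cannot hide a home-directory location
  home_real=$(cd -- "$HOME" && pwd -P) || return 1
  dest_real=$(cd -- "$dest" && pwd -P) || return 1
  case "$dest_real/" in
    "$home_real"/*)
      echo "prepare_oifs_workdir: $dest is inside your home directory; use cluster storage instead" >&2
      return 1 ;;
  esac

  # Copy the scripts and make sure the two executable ones can be run with ./
  cp -- "$src/get_oifs.sh" "$src/setup_oifs" "$src/compile_oifs.sh" "$dest/" || return 1
  chmod u+x -- "$dest/get_oifs.sh" "$dest/compile_oifs.sh"
}

# Example (replace /path/to/your/oifs_work with a directory on cluster storage):
#   mkdir -p /path/to/your/oifs_work
#   prepare_oifs_workdir /storage/silver/metdata/models/openIFS/oifs_repo /path/to/your/oifs_work
#   cd /path/to/your/oifs_work
```

setup_oifs is not made executable because it is meant to be sourced rather than run.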

First, execute get_oifs.sh:

./get_oifs.sh

This will download the model code along with an example configuration which you can use to check if your setup is working. Next, the shell environment needs to be set up. To do this, type:

source setup_oifs

You should see a screen message displaying the currently loaded modulefiles, as well as some important OpenIFS directory paths that are set up with the above command.
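setup_oifs is sourced rather than executed because it has to change the environment of the shell you are typing in. The sketch below demonstrates the difference with a throwaway file standing in for setup_oifs; the variable name DEMO_OIFS_VAR is made up for illustration and is not one of the variables setup_oifs sets.

```shell
#!/usr/bin/env bash
# Demonstration only: a throwaway file stands in for setup_oifs, and
# DEMO_OIFS_VAR is a made-up variable name.
demo_env=$(mktemp)
echo 'export DEMO_OIFS_VAR=configured' > "$demo_env"
unset DEMO_OIFS_VAR

# Executing the file runs it in a child shell, so its settings vanish when it exits
bash "$demo_env"
echo "after bash:   ${DEMO_OIFS_VAR:-unset}"   # prints: after bash:   unset

# Sourcing runs the same commands in the current shell, so the settings persist
source "$demo_env"
echo "after source: ${DEMO_OIFS_VAR:-unset}"   # prints: after source: configured

rm -f "$demo_env"
```

One practical consequence: the settings only exist in the shell where you sourced them, so after logging in again (or in a new terminal) you need to run source setup_oifs again before compiling.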

Now you can compile the model by executing the compile_oifs.sh script. You should only need to do this once if you’re not making changes to the source code.

./compile_oifs.sh

You will see a long sequence of messages as each piece of code is compiled in turn. This may take a while; as long as messages keep scrolling through, all is fine. At the end you should see a few summary lines reporting how successful the compilation was. If every one of them shows failed=0, the compilation worked.
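Rather than reading the summary by eye, you can save the compiler output and scan it. A minimal sketch, assuming only what is described above, namely that the summary lines contain text of the form failed=N; the helper name check_compile_log and the log file name compile.log are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: scan a saved compilation log for "failed=N" counts and
# report success only if every count is zero.
check_compile_log() {
  local log=$1
  local counts

  # Extract every failed=N token; grep exits non-zero if there are none
  if ! counts=$(grep -oE 'failed=[0-9]+' "$log"); then
    echo "check_compile_log: no failed=N summary found in $log" >&2
    return 2
  fi

  # Any token other than exactly failed=0 means something did not compile
  if grep -vqx 'failed=0' <<< "$counts"; then
    echo "check_compile_log: compilation reported failures:" >&2
    grep -vx 'failed=0' <<< "$counts" >&2
    return 1
  fi

  echo "check_compile_log: all summary counts are failed=0"
}

# Example: keep a copy of the compiler output, then check it
#   ./compile_oifs.sh 2>&1 | tee compile.log
#   check_compile_log compile.log
```

The return codes distinguish "failures reported" (1) from "no summary found" (2), which usually means the compilation stopped before printing its summary.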

2. Running the test example

First, navigate to the experiment directory. It was created where you ran the get_oifs.sh script.

cd oifs_experiments/2014021112

In there, you’ll see 2 scripts which you need to run the model:

ifs_job.sh – This contains all the options necessary to run the model.

submit_job.sh – This submits your model to the job queue to run on the cluster.

You will need to edit ifs_job.sh and set the variable OIFS_EXPDIR to the path of the directory you're currently in (the experiment directory). No other settings need to be changed to run this test example. The default setup is the ‘giq5’ experiment with resolution number 255. It runs the model executable ‘master.exe’ (built by compile_oifs.sh in oifs_model/make/gnu-opt/oifs/bin/) with a timestep of 3600 s on 12 processors for a forecast length of 2 days. The results of the first run are saved in a directory called output_1. For subsequent runs, change the OIFS_RUN number so that earlier results don't get overwritten.
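If you prefer not to open an editor, the edit can be scripted. This is a sketch under assumptions: the function name set_job_var is hypothetical, and it assumes ifs_job.sh assigns each variable on a line of its own in the form NAME=value (optionally preceded by export). Check the file afterwards before submitting.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set NAME="value" in a job script such as ifs_job.sh.
# Assumes lines of the form NAME=... or export NAME=...; uses GNU sed -i.
set_job_var() {
  local file=$1 name=$2 value=$3
  local pattern="^([[:space:]]*(export[[:space:]]+)?${name}=).*"
  local escaped

  # Refuse to guess: the variable must already be assigned in the file
  if ! grep -qE "$pattern" "$file"; then
    echo "set_job_var: no assignment to ${name} found in ${file}" >&2
    return 1
  fi

  # Escape the characters that are special in a sed replacement: \ & and the | delimiter
  escaped=$(printf '%s' "$value" | sed -e 's/[\\&|]/\\&/g')

  # Keep the "NAME=" or "export NAME=" prefix and replace the rest of the line
  sed -i -E "s|${pattern}|\\1\"${escaped}\"|" "$file"
}

# Example, run from inside the experiment directory:
#   set_job_var ifs_job.sh OIFS_EXPDIR "$PWD"
#   set_job_var ifs_job.sh OIFS_RUN 2     # so the next run does not overwrite output_1
```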

You’re now ready to run the model! Simply execute the submission script:

sbatch submit_job.sh

This submits your model to the batch queue on the cluster. You can track the progress of the job via the logfile (myout.txt in this example) that is created in the directory you're in. You can check the status of the job on the cluster with the ‘squeue’ command (see the RACC User Guide for further information).
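To check on one particular job rather than scanning the whole queue, you can capture the job ID that sbatch prints. A minimal sketch, assuming sbatch prints its usual "Submitted batch job <id>" line; the helper name jobid_from_sbatch is hypothetical, and sbatch's --parsable option is an alternative that prints the ID directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the job ID from sbatch's usual
# "Submitted batch job <id>" message read on standard input.
jobid_from_sbatch() {
  local line
  local re='^Submitted batch job ([0-9]+)'
  while IFS= read -r line; do
    if [[ $line =~ $re ]]; then
      echo "${BASH_REMATCH[1]}"
      return 0
    fi
  done
  echo "jobid_from_sbatch: no job ID found (did sbatch report an error?)" >&2
  return 1
}

# Example, run from the experiment directory:
#   jobid=$(sbatch submit_job.sh | jobid_from_sbatch)
#   squeue -j "$jobid"     # pending (PD) or running (R)?
#   tail -f myout.txt      # follow the log; Ctrl-C stops tail, not the job
```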

3. Amendments to the above instructions for downloading OpenIFS v43r3

The download, compilation and testing of the OpenIFS 43r3 release follow the same principles as for the 40r1 version described in sections 1 and 2 above. The source code is obtained and compiled with the same commands; only the location of the scripts is slightly different. OpenIFS 43r3 on the RACC is also supplied with a set of initial condition files that, like the test example above, can be used to test the setup. If you don't wish to download or run the test case, you can amend the ‘get_oifs.sh’ script and remove the part that acquires the example.

You can copy the OpenIFS 43r3 scripts from the following location:

/storage/silver/metdata/models/openIFS/oifs_repo_43r3

Now the rest of the commands in section 1 can be executed, as the scripts for downloading, setting up the shell environment and compiling OpenIFS 43r3 are the same as those for 40r1.

The test case can be run in a similar way to that in section 2, except that the experiment has a different start date and hence a different directory name.

The experiment directory was created where you ran the get_oifs.sh script. You can navigate to the test case with:

cd oifs_experiments/1998012900
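If you switch between the two versions, it can help to keep their differences in one place. The sketch below only encodes the locations given in this guide (script repository and test experiment directory per version); the function names are hypothetical, and it assumes the 43r3 scripts carry the same names as the 40r1 ones, as stated above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers mapping an OpenIFS version to the RACC locations given
# in this guide: the script repository and the test experiment directory.
oifs_repo_dir() {
  case "$1" in
    40r1) echo /storage/silver/metdata/models/openIFS/oifs_repo ;;
    43r3) echo /storage/silver/metdata/models/openIFS/oifs_repo_43r3 ;;
    *) echo "oifs_repo_dir: unknown version '$1' (expected 40r1 or 43r3)" >&2; return 1 ;;
  esac
}

oifs_test_expdir() {
  case "$1" in
    40r1) echo oifs_experiments/2014021112 ;;
    43r3) echo oifs_experiments/1998012900 ;;
    *) echo "oifs_test_expdir: unknown version '$1' (expected 40r1 or 43r3)" >&2; return 1 ;;
  esac
}

# Example: copy the 43r3 scripts into the current (non-home) directory
#   src=$(oifs_repo_dir 43r3) && cp "$src"/get_oifs.sh "$src"/setup_oifs "$src"/compile_oifs.sh .
# and, after get_oifs.sh has run:
#   cd "$(oifs_test_expdir 43r3)"
```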

The two scripts you need to submit and run the model are the same:

ifs_job.sh – This contains all the options necessary to run the model.

submit_job.sh – This submits your model to the job queue to run on the cluster.

Edit ifs_job.sh to suit your needs. If you just want a quick check that your OpenIFS 43r3 build runs correctly, you only need to set OIFS_EXPDIR to the path of your experiment directory. You can then run the test case with:

sbatch submit_job.sh

This submits your model to the batch queue on the cluster. You can track the progress of the job via the logfile (myout.txt in this example) that is created in the directory you're in. You can check the status of the job on the cluster with the ‘squeue’ command (see the RACC User Guide for further information).

NOTE FOR 43r3: Most variables don't seem to be passed from ifs_setup.sh to the model run, hence the namelist needs to be checked and amended. Most values, such as the timestep and experiment ID, should already be correct in the namelist, but the forecast length needs to be changed in the namelist itself, e.g. CUSTOP='d5', for a forecast length of 5 days.
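Because the forecast length has to be changed in the namelist itself, a one-line edit is enough. A minimal sketch, assuming the namelist is the file fort.4 in the experiment directory (check which file your setup actually uses) and that CUSTOP appears in upper case at the start of a line; set_custop is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the forecast length (in days) in an IFS namelist
# by rewriting its CUSTOP entry, e.g. CUSTOP='d5', for 5 days.
# Assumes the entry is upper case at the start of a line; uses GNU sed -i.
set_custop() {
  local namelist=$1 days=$2

  if ! [[ $days =~ ^[0-9]+$ ]]; then
    echo "set_custop: forecast length must be a whole number of days, got '$days'" >&2
    return 1
  fi
  if ! grep -qE '^[[:space:]]*CUSTOP[[:space:]]*=' "$namelist"; then
    echo "set_custop: no CUSTOP entry found in $namelist" >&2
    return 1
  fi

  # Replace the old value (everything up to the next comma), keeping the indentation
  sed -i -E "s/^([[:space:]]*)CUSTOP[[:space:]]*=[^,]*,?/\\1CUSTOP='d${days}',/" "$namelist"
}

# Example, in the experiment directory (fort.4 assumed to be the namelist file):
#   set_custop fort.4 5
#   grep CUSTOP fort.4
```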